Introduction

Artificial intelligence is all around us, but the AI that exists today isn't much more than a set of mathematical algorithms that solve optimisation and classification problems. It might perform better than humans at some narrow, specific tasks, but it falls behind in so many other things. As AI researcher Yoshua Bengio said, "I don't think we're anywhere close today to the level of intelligence of a two-year old child." But is there a possibility of AI superintelligence in the future? If you put this question to a hundred different people, my guess is that ninety would say yes. Let's explore the argument supporting the case for artificial superintelligence.

Before we dive into the various arguments, I'll briefly define superintelligence in the AI sense. According to Oxford philosopher Nick Bostrom, superintelligence is "an intellect that is much smarter than the best human brains in practically every field, including scientific creativity, general wisdom and social skills". It is this definition that we will be working with for the rest of this post.

If Human-level Intelligence, then Superintelligence

The question of whether superintelligence can be achieved is closely linked to whether human-level intelligence can be achieved by AI. Why, you ask?

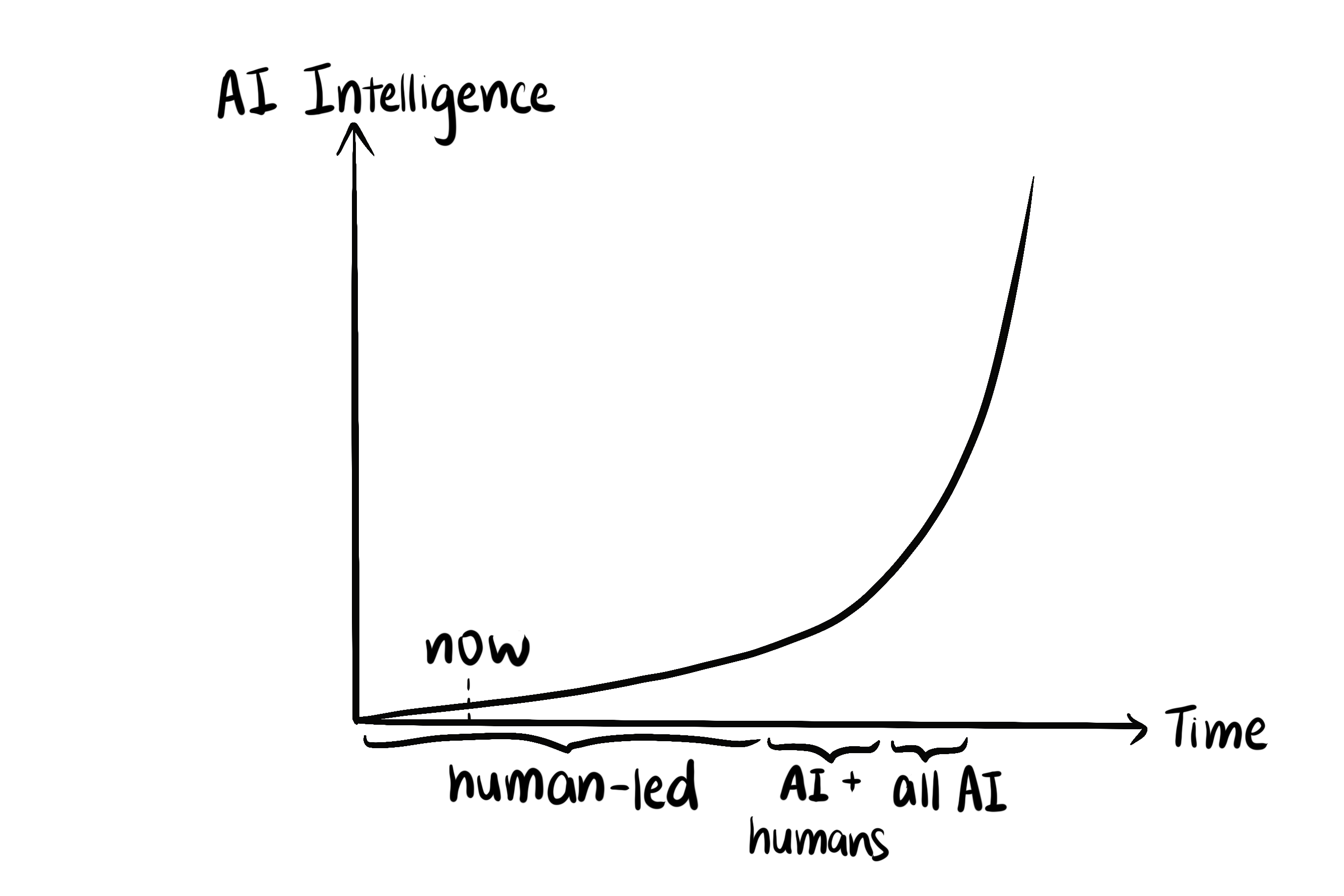

Current efforts in AI research are completely dominated by human-led initiatives. Humans do the work in research and development, which gradually "increases" the intelligence of AI (take the intelligence of AI to be that of the most intelligent AI on the planet at any given time).

However, the minute AI reaches human-level intelligence, it can take part in research and help the field progress. It's no longer just humans putting in the effort: there is now an artificial being (or several artificial beings) that can do the work just as well as a human can. And all this time, the intelligence of AI is creeping up, day by day.

Over time, AI becomes smarter and smarter. Its contributions to AI research also become more and more significant, as it can offer smarter ideas and make bigger breakthroughs. And because AI is essentially doing research that improves itself, every breakthrough directly increases the rate of AI research and development. In short:

$$ \frac{dI}{dt} \propto I $$

Those who are familiar with mathematics will know that this differential equation has an exponential function as its solution. For those who are less familiar, it is enough to know that the rate of breakthroughs in artificial intelligence will increase rapidly as time passes. This means that once AI starts taking part in AI research, an "intelligence explosion" will occur and superintelligence will soon come into existence.
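For the curious, here is a short worked version of that step. It assumes a constant of proportionality $k > 0$ and a starting intelligence $I_0$ at the moment AI joins the research effort; both symbols are my own additions for illustration. Writing the proportionality as an equality gives

$$ \frac{dI}{dt} = kI $$

Separating the variables and integrating both sides:

$$ \int \frac{dI}{I} = \int k \, dt \quad \Rightarrow \quad \ln I = kt + C \quad \Rightarrow \quad I(t) = I_0 e^{kt} $$

Under this toy model, the time it takes for intelligence to double is fixed at $\ln 2 / k$, no matter how high $I$ already is. That constant doubling time is what makes the growth feel explosive.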

It is important to note that, at this point, AI doesn't have to reach human level at absolutely everything. In fact, there is only one thing it needs to do at human level: AI research. As long as it can conduct AI research as well as a human can, everything else will follow. This could potentially lower the bar for what is truly necessary for an artificial superintelligence to exist.
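The two-phase picture from this section (humans alone first, then humans plus AI) can be sketched numerically. What follows is only a toy model under loud assumptions: the function names, the constant `human_rate`, the feedback constant `k`, the `human_level` threshold and every number in it are hypothetical values I picked for illustration, not measurements of anything. It uses simple forward-Euler integration.

```python
def simulate_intelligence(human_rate=1.0, k=0.5, human_level=10.0,
                          dt=0.01, t_end=30.0):
    """Forward-Euler integration of a toy two-phase growth model.

    Phase 1 (AI below human level): dI/dt = human_rate
    Phase 2 (AI at or above human level): dI/dt = human_rate + k * I

    All parameters are illustrative assumptions.
    Returns a list of (time, intelligence) pairs.
    """
    steps = int(round(t_end / dt))
    t, level = 0.0, 0.0
    history = [(t, level)]
    for _ in range(steps):
        # Humans always contribute at a constant rate.
        rate = human_rate
        # Once AI can do research itself, it adds a term proportional to I.
        if level >= human_level:
            rate += k * level
        level += rate * dt
        t += dt
        history.append((t, level))
    return history


def first_time_reaching(history, target):
    """Return the first simulated time at which intelligence >= target."""
    for t, level in history:
        if level >= target:
            return t
    return None


if __name__ == "__main__":
    history = simulate_intelligence()
    # Compare how long each successive doubling takes.
    for target in (10.0, 20.0, 40.0, 80.0):
        print(target, first_time_reaching(history, target))
```

With these made-up numbers, the climb from zero to human level is slow and linear, while each doubling after that takes only a small fraction of that time. Changing `k` changes how sharp the takeoff is, but not the overall shape.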

State of the Art

Now that we have established that superintelligence is ultimately achievable once human-level artificial intelligence exists, the question becomes "Is human-level AI achievable?" The instinctive answer (at least for me) is yes, but it takes a bit more consideration to understand why this is the case.

Firstly, I would like to make the case for AI that is achieved using a method other than deep learning. Current AI research is heavily focused on deep learning. You know the buzzwords: neural networks, natural language processing, optical character recognition. Today, all of these lean heavily on deep learning. However, this doesn't mean that artificial superintelligence must come from deep learning. It very well might, and I might add that there is a decent chance that it will, but there is also another route to human-level AI (also called artificial general intelligence, or AGI) that is more sure-fire, even though it might take a long time. But time isn't really the main issue that we're exploring here; it's sufficient to know that AGI is inevitable. That route is whole brain emulation.

Whole brain emulation, as its name suggests, is the process of creating a complete model of the human brain within a computer. The hope is that the brain can be scanned and "uploaded" in sufficient detail that it can be run on hardware that is not biological in origin, and function much as a brain would within a human body.

It might seem hard to imagine in this day and age, but developing whole brain emulation might not be as impossible as you think. Yes, there's a lot to do before the technology can be realised. Firstly, you have to figure out how to scan a brain at a resolution high enough to emulate everything down to neuron activity. You would then need to convert the raw scanning data into a computational model accurate enough to represent the brain. From the map of the network of neurons to the strength of the connections between them, the model must be able to simulate processes similar to those of the human brain. Then there's the whole issue of running the model on hardware that is efficient enough to simulate brain processes. None of this can currently be accomplished. So why am I saying that it's one of the more sure-fire ways of reaching AGI?

In essence, the argument is that there's no reason why something that can be achieved biologically can't be achieved artificially. We already have a fully working model in the form of the human brain; all we need to do is replicate it using man-made materials instead of biologically manufactured ones. Unless there is something that is fundamentally impossible to replicate (like a soul), there's no evidence to suggest that the path of whole brain emulation is unachievable.

The clarity of the path to whole brain emulation shows just how much work remains, so the technology is almost certainly a long way away, but it also means that we can be relatively certain of its achievability. In the end, AGI may very well not stem from whole brain emulation. But the existence of such a method proves the inevitability of the technology, which in turn suggests that superintelligence will come into existence sooner or later.

Conclusion

In essence, the argument is that "if human-level intelligence is achieved, then superintelligence will be achieved" and "human-level intelligence will be achieved by whole brain emulation if by nothing else". I've taken a lot of the ideas from Nick Bostrom's "Superintelligence" and pieced them together to form a (hopefully) cohesive argument about why artificial superintelligence is not only possible, but imminent.

You may have noticed that I haven't mentioned anything about the dangers and risks of developing AI, and there certainly are many. That is a blog post for another time. In this one I just wanted to form an argument about why superintelligence will happen in the first place, so that everything else can build on top of it.

If you have any thoughts about this topic, I would be very interested to hear what you think!
